Brain-Computer Interfaces

A few days ago I was reading a paper that combined brain-computer interfaces (BCIs) with generative adversarial networks (GANs), and thought I should write an introductory article on what BCIs are and how they work. The topic is an elegant example of how machine learning can genuinely improve someone's quality of life. I really enjoy this topic and spent my master's studying and working in this field.

What is a brain-computer interface?

A BCI system is a communication pathway that does not require any muscular activity and instead depends exclusively on neural activity [1]. As such, a BCI may be a suitable alternative access pathway for people with severe motor impairments due to conditions such as amyotrophic lateral sclerosis (ALS), stroke, cervical spinal cord injury, cerebral palsy (CP), or muscular dystrophies [2].

How does a BCI work?

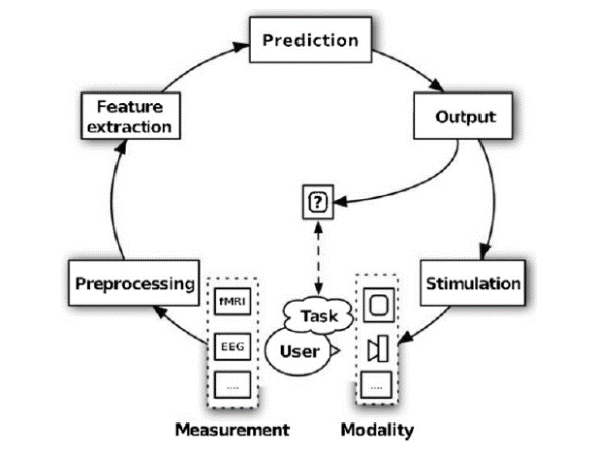

The first step in a BCI cycle is to measure brain signals, for example through electroencephalography (EEG) or near-infrared spectroscopy (NIRS). The choice of modality depends on the application of the BCI and the mental task used for control. EEG measures the summation of electrical activity at the scalp, caused primarily by synaptic activity in the upper layers of the cortex [3], whereas NIRS is an optical spectroscopy method that measures the hemodynamic response during neural activity by shining near-infrared light through the skull [4].

The next step is pre-processing, which is necessary because of the low signal-to-noise ratio (SNR) of brain signals. The SNR is low because the signals pass through the various layers of the skull and are contaminated by background noise, both from inside the brain and externally over the scalp [5], [6]. Pre-processing aims to maximise the probability of detecting task-related brain activity.

After pre-processing, discriminative features must be extracted, which is very challenging because there are many irrelevant and confounding brain activities [7]. Feature engineering is critical for avoiding the curse of dimensionality: the aim is to create a lower-dimensional feature vector without losing relevant information [8]. Next comes classification, where one algorithmically categorises the mental state of the user on the basis of the extracted features; how this step is implemented depends on the application and the data.

The detected mental state is subsequently used to control an external device, such as a wheelchair or a speller. The BCI cycle concludes with the user’s perception of the output. The figure above provides a schematic summary of the BCI cycle.
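To make the cycle more concrete, here is a minimal Python sketch of the pre-processing, feature extraction and classification steps on simulated single-channel data. Everything in it is an assumption for illustration rather than the method of any cited paper: the 250 Hz sampling rate, the simulated 10 Hz rhythm, the band-power features, and the nearest-centroid classifier, which I picked only because it fits in a few lines.

```python
import numpy as np

FS = 250  # sampling rate in Hz (assumed for this sketch)


def make_trial(rng, rhythm_amp, n_samples=500):
    """Simulate one trial: a 10 Hz rhythm buried in unit-variance noise."""
    t = np.arange(n_samples) / FS
    return rhythm_amp * np.sin(2 * np.pi * 10 * t) + rng.normal(0, 1.0, n_samples)


def band_power(trial, low, high):
    """Mean spectral power of the trial within the band [low, high] Hz."""
    freqs = np.fft.rfftfreq(trial.size, d=1 / FS)
    power = np.abs(np.fft.rfft(trial)) ** 2 / trial.size
    mask = (freqs >= low) & (freqs <= high)
    return power[mask].mean()


def extract_features(trial):
    """Reduce a 500-sample trial to two numbers: log power in 8-12 and 13-30 Hz."""
    return np.log([band_power(trial, 8, 12), band_power(trial, 13, 30)])


class NearestCentroid:
    """Toy classifier: assign each sample to the closest class mean."""

    def fit(self, X, y):
        self.labels_ = np.unique(y)
        self.centroids_ = np.array([X[y == c].mean(axis=0) for c in self.labels_])
        return self

    def predict(self, X):
        dists = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.labels_[dists.argmin(axis=1)]


def run_demo(seed=0):
    """Simulate two mental states, train on 60 trials, test on the other 20."""
    rng = np.random.default_rng(seed)
    amps = {0: 0.2, 1: 1.5}  # hypothetical states: weak vs strong 10 Hz rhythm
    X, y = [], []
    for label, amp in amps.items():
        for _ in range(40):
            X.append(extract_features(make_trial(rng, amp)))
            y.append(label)
    X, y = np.array(X), np.array(y)
    idx = rng.permutation(len(y))
    train, test = idx[:60], idx[60:]
    clf = NearestCentroid().fit(X[train], y[train])
    return float((clf.predict(X[test]) == y[test]).mean())


if __name__ == "__main__":
    print(f"held-out accuracy: {run_demo():.2f}")
```

Restricting the spectrum to two frequency bands stands in for pre-processing here, and collapsing each trial into a two-element feature vector is the dimensionality reduction described above. Real recordings are multi-channel and far noisier, so treat this only as a picture of how the steps connect.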

BCI Types and Control Signals

BCIs can be either invasive or non-invasive. Although invasive BCIs offer substantially higher signal-to-noise ratio and spatial resolution, their clinical translation to date has been very limited.

Non-invasive BCIs fall into two groups based on which brain signals are used for control: active (endogenous) BCIs and reactive (exogenous) BCIs. The former require the user to actively engage in a cognitive activity, for example performing mental arithmetic or imagining limb movement. Reactive BCIs, on the other hand, rely on the brain activity associated with the user’s natural reaction to an external stimulus, e.g. looking at flashing LEDs or pictures on a computer screen [9].
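As an illustration of the reactive case, suppose each LED flickers at its own frequency and the brain response contains a component at the frequency of the LED the user is looking at (a design commonly known as a steady-state visual evoked potential BCI; the article above does not name a specific paradigm, so this is my assumption). A minimal sketch of the detection step could then look like the following, where the sampling rate, LED frequencies and simulated signal are all made up for the example.

```python
import numpy as np

FS = 250  # sampling rate in Hz (assumed)


def detect_attended_frequency(signal, candidate_freqs, fs=FS):
    """Return the candidate flicker frequency with the largest spectral power."""
    freqs = np.fft.rfftfreq(signal.size, d=1 / fs)
    power = np.abs(np.fft.rfft(signal)) ** 2
    # Score each candidate by the power in its nearest frequency bin.
    scores = [power[np.argmin(np.abs(freqs - f))] for f in candidate_freqs]
    return candidate_freqs[int(np.argmax(scores))]


def simulate_response(attended_freq, seconds=4, fs=FS, seed=0):
    """Hypothetical response: a sinusoid at the attended frequency plus noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(seconds * fs)) / fs
    return 0.5 * np.sin(2 * np.pi * attended_freq * t) + rng.normal(0, 1.0, t.size)


if __name__ == "__main__":
    led_freqs = [8.0, 10.0, 12.0, 15.0]  # one hypothetical frequency per LED
    eeg = simulate_response(attended_freq=12.0)
    print("attended LED frequency:", detect_attended_frequency(eeg, led_freqs))
```

Each LED would be mapped to a command (a letter, a direction), so picking the frequency amounts to picking the command. The user does nothing beyond looking at the stimulus, which is what makes this kind of BCI reactive rather than active.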

Limitations

The main limitation is that the hardware isn't quite there yet. The setup has yet to become suitable for day-to-day use, where a caregiver can easily set up the equipment without a technician. Wet EEG caps, for example, require conductive gel to improve signal quality, and it's not as simple as just putting the cap on: you need to connect the desired electrodes and make sure each connection is good enough. Although wireless and dry (gel-free) caps are on the market, they are not as comfortable and their signal quality isn't as good. The measurement is also quite sensitive to noise, e.g. head or mouth movements, although this can be mitigated to some extent through pre-processing. In addition, BCI systems need to give more control to the user, e.g. over when they want to attend to stimuli.
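One simple example of such a pre-processing technique is to discard segments of the recording (epochs) whose amplitude swings too widely, since movement tends to produce much larger deflections than the underlying brain activity. The sketch below assumes epochs stored as a NumPy array in microvolts; the 150 µV threshold and the simulated data are illustrative choices of mine, not recommended values.

```python
import numpy as np


def reject_artifacts(epochs, max_peak_to_peak):
    """Keep epochs whose peak-to-peak amplitude is below the threshold.

    epochs: array of shape (n_epochs, n_samples), same units as the threshold.
    Returns the retained epochs and a boolean mask of which ones were kept.
    """
    peak_to_peak = epochs.max(axis=1) - epochs.min(axis=1)
    keep = peak_to_peak < max_peak_to_peak
    return epochs[keep], keep


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    epochs = rng.normal(0, 10.0, size=(5, 250))  # simulated background activity
    epochs[2, 100:120] += 300.0  # simulated movement artifact in the third epoch
    clean, keep = reject_artifacts(epochs, max_peak_to_peak=150.0)
    print("kept epochs:", keep)
```

Throwing data away is a blunt tool, and it only helps "to some extent": if the user moves often, too few clean epochs may remain.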

Another limitation is task irrelevance in some active BCIs: for instance, the user may be asked to do addition in their head to move their wheelchair to the right and to think of a song to move it to the left. This is mainly because brain signals generated by vastly different tasks are easier to distinguish from one another. However, some recent research has challenged this limitation [10].

Conclusion

BCIs sit at the intersection of human and computer brains. Despite the limitations, this field is truly impactful, and it is only a matter of time before the technology reaches a state where it can be used widely, on a daily basis, by those who need it.

Check out my video where I summarise my thesis on BCIs in one minute! https://www.youtube.com/watch?v=wt3Xj7BNQZs&feature=emb_title

If you enjoy learning about BCIs, machine learning and natural language processing, follow me on Twitter for more content!

References:

[1] A. Kübler, B. Kotchoubey, J. Kaiser, J. R. Wolpaw, and N. Birbaumer, “Brain–computer communication: Unlocking the locked in,” Psychol. Bull., vol. 127, no. 3, p. 358, 2001.

[2] A. Rezeika, M. Benda, P. Stawicki, F. Gembler, A. Saboor, and I. Volosyak, “Brain–Computer Interface Spellers: A Review,” Brain Sci., vol. 8, no. 4, p. 57, 2018.

[3] E. J. Speckman, C. E. Elger, and A. Gorji, “Neurophysiologic Basis of EEG and DC Potentials,” pp. 1–16.

[4] S. M. Coyle, T. E. Ward, and C. M. Markham, “Brain-computer interface using a simplified functional near-infrared spectroscopy system,” J. Neural Eng., vol. 4, no. 3, pp. 219–226, 2007.

[5] J. R. Wolpaw et al., “Brain-computer interface technology: a review of the first international meeting,” IEEE Trans. Rehabil. Eng., vol. 8, no. 2, pp. 164–173, 2000.

[6] S. Moghimi, A. Kushki, A. Marie Guerguerian, and T. Chau, “A review of EEG-Based brain-computer interfaces as access pathways for individuals with severe disabilities,” Assist. Technol., vol. 25, no. 2, pp. 99–110, 2013.

[7] L. F. Nicolas-Alonso and J. Gomez-Gil, “Brain computer interfaces, a review,” Sensors, vol. 12, no. 2, pp. 1211–1279, 2012.

[8] J. Wang, G. Xu, L. Wang, and H. Zhang, “Feature extraction of brain-computer interface based on improved multivariate adaptive autoregressive models,” in 2010 3rd International Conference on Biomedical Engineering and Informatics, 2010, vol. 2, pp. 895–898.

[9] T. O. Zander and C. Kothe, “Towards passive brain-computer interfaces: Applying brain-computer interface technology to human-machine systems in general,” J. Neural Eng., vol. 8, no. 2, 2011.

[10] A. R. Sereshkeh, R. Trott, A. Bricout, and T. Chau, “Online EEG classification of covert speech brain–computer interfacing,” Int. J. Neural Syst., vol. 27, no. 8, p. 1750033, 2017.